Air quality monitoring is relatively new in the commercial space, and we’ve seen a number of issues and concerns remarkably similar to those we faced in the early days of air quality monitoring in the industrial space.

This article provides a brief history of the development of air quality instrumentation in the cleanroom space. It appears that we’re at the beginning of similar developments for air quality instrumentation in the commercial space; in fact, many of the key players in indoor/outdoor air quality are already raising these same issues today.

For the purposes of this article, I’ll discuss only particulate monitoring, since it’s what I’m most familiar with, but similar issues exist to some degree with gas sensors and other environmental sensors.

Particle counting as an industry was born largely to address yield problems in semiconductor manufacturing. The explosion of semiconductors in the 1960s and 1970s revolutionized the world: circuitry that had previously been large, bulky, and power-hungry was quickly replaced by miniature solid-state circuits produced at a fraction of the cost. The miniaturization, though, meant that the very small geometries in these circuits could be compromised by airborne (or liquid-borne) particulates inadvertently deposited on the surface of the circuitry during the manufacturing process.

Particulates above a specific size (dictated by the process geometries of the devices being produced) could cause shorts or opens within these circuits, causing them to malfunction or fail. Since many of these circuits were manufactured simultaneously (on the same silicon wafer), even short-lived air quality issues could destroy large numbers of devices.

To keep particles above a specific threshold size to an absolute minimum, semiconductor manufacturers built large, expensive cleanrooms with extensive filtration, aiming to keep the air in their manufacturing environments as free as possible of particulates above that size.

Of course, installing filtration was no guarantee that this would be the case. There are many ways that filters can fail and that clean environments can be compromised, so keeping the environment within the required tolerances for the process in question was an ongoing battle. The major tool used to do so was the optical particle counter.

Put simply, these instruments sample the air in an environment by drawing it carefully through a chamber in which a light source (typically a laser) projects a thin ribbon of light across the airflow. If the air is perfectly clean, it passes through the ribbon without scattering any of that light. When particles are present, however, each one scatters light as it crosses the beam, and the amount of light scattered increases with the size of the particle. A detector measures the scattered light and increments one or more internal counters according to the amount of light from each particle. In this way, an instrument can count all the particles passing through it and report how many were seen during a sample period, sorted into various size bins (or channels).
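To make the counting and binning step concrete, here is a minimal Python sketch. It assumes the detector has already reduced each particle's scattered light to a single pulse height; the channel sizes, the threshold values, and the function names `bin_pulses` and `cumulative` are hypothetical placeholders, not calibration data or firmware from any real instrument (which would do this in hardware or embedded code).

```python
from bisect import bisect_right

# Hypothetical channel sizes (particle diameter, micrometres), one per channel.
CHANNEL_SIZES_UM = [0.3, 0.5, 1.0, 2.5, 5.0, 10.0]

# Hypothetical pulse-height thresholds (arbitrary detector units), one per
# channel and in increasing order. A real instrument derives its thresholds
# from calibration with reference particles of known size.
THRESHOLDS = [20.0, 45.0, 110.0, 400.0, 1200.0, 3000.0]


def bin_pulses(pulse_heights, thresholds=THRESHOLDS):
    """Sort one sample period's pulses into differential size channels.

    counts[i] is the number of pulses at or above thresholds[i] but below
    thresholds[i + 1]; the last channel is open-ended. Pulses below the
    smallest threshold are treated as noise and not counted.
    """
    counts = [0] * len(thresholds)
    for height in pulse_heights:
        # How many thresholds this pulse reaches; 0 means below every channel.
        idx = bisect_right(thresholds, height)
        if idx > 0:
            counts[idx - 1] += 1
    return counts


def cumulative(counts):
    """Convert differential counts to cumulative counts (>= each channel size)."""
    totals, running = [], 0
    for c in reversed(counts):
        running += c
        totals.append(running)
    return totals[::-1]


if __name__ == "__main__":
    # Illustrative pulse heights for a single sample period (made-up values).
    pulses = [5.0, 25.0, 30.0, 50.0, 500.0, 4000.0]
    diff = bin_pulses(pulses)
    for size, d, c in zip(CHANNEL_SIZES_UM, diff, cumulative(diff)):
        print(f">= {size} um: {c} cumulative, {d} in channel")
```

With the made-up pulses above, `bin_pulses` returns `[2, 1, 0, 1, 0, 1]` and `cumulative` turns that into `[5, 3, 2, 2, 1, 1]`; the 5.0 pulse falls below the first threshold and is dropped. Computing both forms reflects that counts may be reported per channel or as totals at or above each size.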

The manufacturer could then look at these readings and determine whether the quantity of particulates above their specified threshold was acceptable. Having this information in real time (as opposed to capturing a sample of air and sending it off for analysis) allowed them to control their process more tightly and respond much more quickly to events, in many cases saving huge sums.

The early instruments were very crude. Built entirely from discrete logic chips (no microcontrollers or onboard processors), they used thresholds that a technician calibrated by hand; these thresholds drove binary counters, and the instrument would periodically shift the counts to small numeric displays before resetting itself and restarting. There was no logging, no control, and very few features.

These products were a commercial response to a pressing need, and a number of vendors sprang up to supply them and meet the growing demand. There were no standards for how this should be done; each company developed its own solution based on this basic principle.

Over time, features such as logging, printing, external communication interfaces, more channels, and channels for smaller particle sizes were added in response to client demands and in an attempt to differentiate products.

This took a few decades, and over that time these instruments had a huge impact on production yields and quickly became an absolute requirement in every semiconductor cleanroom. An enormous appetite for semiconductors had created a huge need for these instruments. Eventually these products found their way into all industrial cleanrooms (e.g., the disk industry, life sciences). After all, it only makes sense to measure what you’re trying to control, and the more important that control is, the more critical the measurement becomes.

At first, semiconductor companies were created by mavericks developing products and bringing them to market, often as fast as they could. They strove to provide process control, but these companies were startups with limited resources, very short design cycles, and large process geometries (by today’s standards). So they could get away with instruments that were “reasonably accurate,” and processes could be tuned to the instrument they had on hand.

However, as the semiconductor industry matured, geometries shrank, production volumes soared, and it became increasingly important to fine-tune manufacturing processes and, in most cases, to transfer them offshore. To do that, manufacturers could no longer rely on the instrument they’d used to fine-tune the process on their pilot line; they had to be able to specify a process using parameters that could be measured with any ‘standard’ instrument. Unfortunately, no such standard existed.

A client could buy instruments from two separate vendors and find that their particle counts varied dramatically (dramatically enough to make it impossible to reliably transfer a manufacturing process). Even more worrisome, two instruments from the same vendor could also vary dramatically. So significant pressure was applied to the vendors to find a way to standardize the manufacture of these instruments, so that a client could buy instruments from a variety of vendors and have them agree on particle density and size distribution within an environment.

This process took some time, and initially a Japanese standard (JIS) emerged. It was later supplanted by an international standard (ISO 21501-4), which became a requirement for anyone supplying the cleanroom industry. The standard was created by the manufacturers in response to the pressure described above and codified the various tests required to build an instrument that would provide accurate air quality measurements.

It’s interesting to note that when this standard was introduced, none of the existing manufacturers could ship a product that met it. Every one of them had to go through a significant redesign process to comply. Some of the smaller manufacturers certainly could not meet the standard, and over the years many would-be manufacturers have been unable to clear this hurdle and bring credible products into the space.

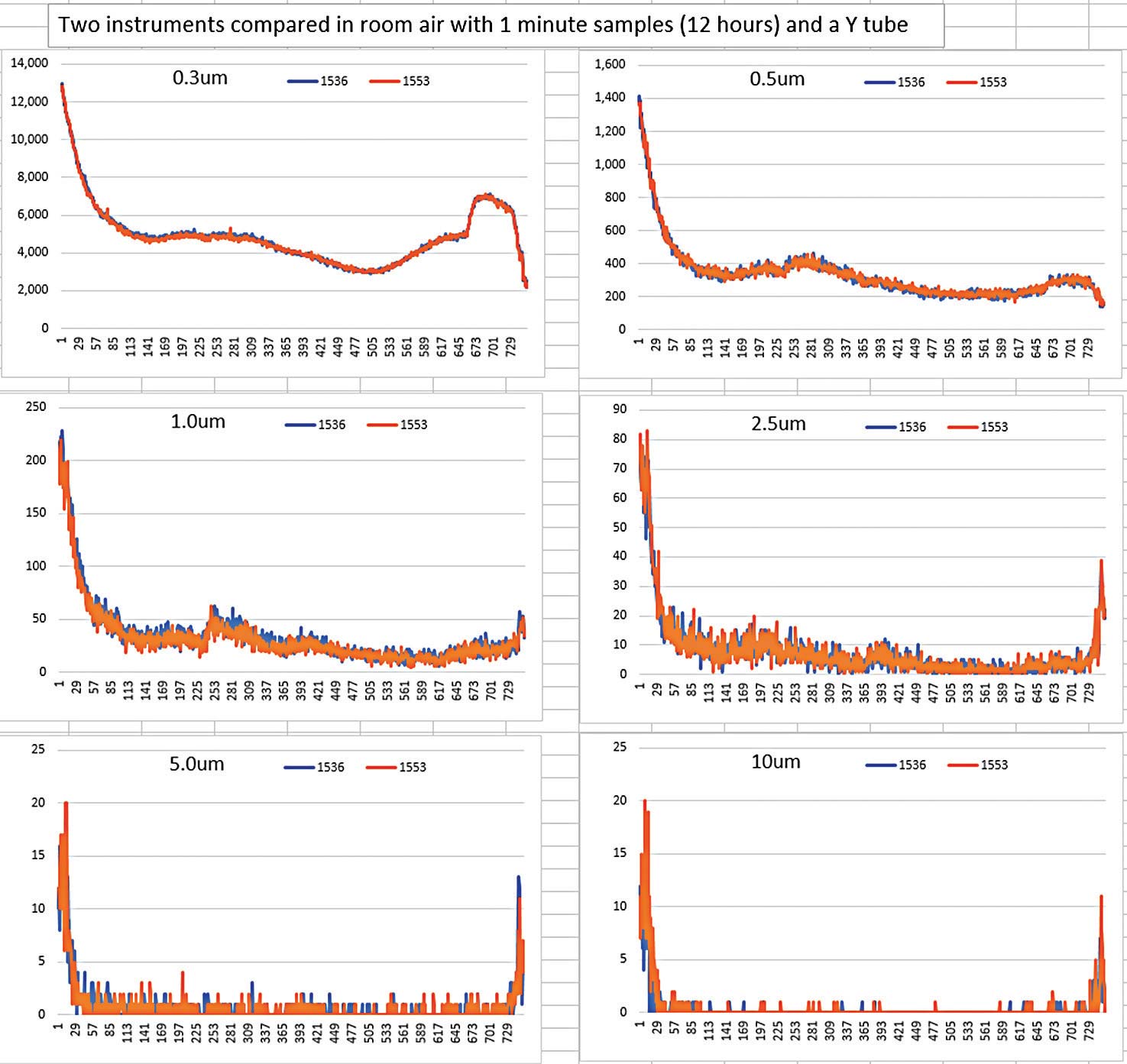

What the standard did, however, was make it possible for instruments that met it to agree much more closely on particle counts by size within an environment, which in turn allowed the cleanroom industry to rely on these instruments to control its processes. As an example, the figure above shows two instruments from our production line connected by a tube with a Y-fitting, so that both sample the same air, running 1-minute samples over a 12-hour period. Each plot shows a different size channel, from 0.3 µm through 10 µm, and in every channel the two instruments correlate very closely with each other.

Because of ISO 21501-4, all our instruments track similarly. And, with a high-quality calibration system, we find that instruments calibrated many months apart also track with similar correlation.
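A side-by-side test like the one described above can be summarized channel by channel with a correlation coefficient. The Python sketch below assumes each instrument's log has been reduced to lists of counts per channel, aligned on the same sample times; Pearson correlation is an assumed choice of metric (the article does not say what statistic was used), and the channel labels, data, and function names are hypothetical. This is not the analysis behind the figure.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length count series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length (at least 2 samples)")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


def total_ratio(xs, ys):
    """Ratio of total counts, instrument B over instrument A, for the whole run."""
    return sum(ys) / sum(xs)


def compare_instruments(a, b):
    """Compare two co-located instruments channel by channel.

    a and b map a channel label (e.g. "0.3um") to per-sample counts logged at
    the same sample times. Returns {channel: (correlation, count ratio)} for
    channels present in both logs.
    """
    return {
        ch: (pearson(a[ch], b[ch]), total_ratio(a[ch], b[ch]))
        for ch in a
        if ch in b
    }


if __name__ == "__main__":
    # Made-up per-minute counts, for illustration only (not data from the figure).
    unit_a = {"0.3um": [120, 135, 160, 150, 300], "0.5um": [40, 42, 55, 50, 95]}
    unit_b = {"0.3um": [118, 140, 158, 149, 290], "0.5um": [41, 44, 53, 52, 97]}
    for ch, (r, ratio) in compare_instruments(unit_a, unit_b).items():
        print(f"{ch}: r = {r:.3f}, B/A = {ratio:.3f}")
```

Correlation alone shows whether two units rise and fall together; the count ratio is included because two instruments can correlate well while one consistently reads higher, which is the kind of disagreement between instruments that the standard was meant to eliminate.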

So, what does this mean for the commercial air quality space? It’s important to note that what drove the growth and development of these instruments in the cleanroom space was the enormous demand for these products. Because of that demand, the industry grew quickly, with clients initially consuming whatever instruments were at hand without much oversight or discrimination.

We believe we’re seeing similar conditions today in the commercial air quality space. There is an enormous appetite for instrumentation and sensors to monitor air quality in many environments and applications, and many vendors in the space are making unsupported or misinformed claims and selling entirely unsuitable products to unsuspecting clients who have no simple means of determining suitability. We believe that clients will soon demand that these instruments meet some air quality instrument manufacturing standard (yet to be defined), and that doing so will certainly change the playing field. It will move us toward the day when a client can choose between instruments based on features, price, service, etc., and rely on the instrument’s adherence to a manufacturing standard to ensure that it provides accurate information.

In upcoming installments of this series, I’ll outline how ISO 21501-4 resolved the manufacturing issues, discuss some of the challenges in using PM2.5 as an air quality standard, and offer some thoughts on what commercial air quality instrumentation and industry standards might look like.

This article, by Particles Plus, Inc.’s Chief Technology Officer David Pariseau, originally appeared in the January 2019 issue of Healthy Indoors magazine.

About the Author

David Pariseau is an embedded systems design engineer with 36 years of development experience in consumer electronics, financial payment, medical devices, lab instrumentation, industrial controls, and machine design. David founded Lighthouse Associates (now known as Lighthouse Worldwide Solutions) in 1985, Technology Plus in 1995, and SinoEV Technologies in 2009. He co-founded Particles Plus in 2010, a company focused on bringing quality products into the mainstream commercial air quality monitoring space. He can be reached at dpariseau@particlesplus.com.